- “Fallen angels will all go back up, eventually.”

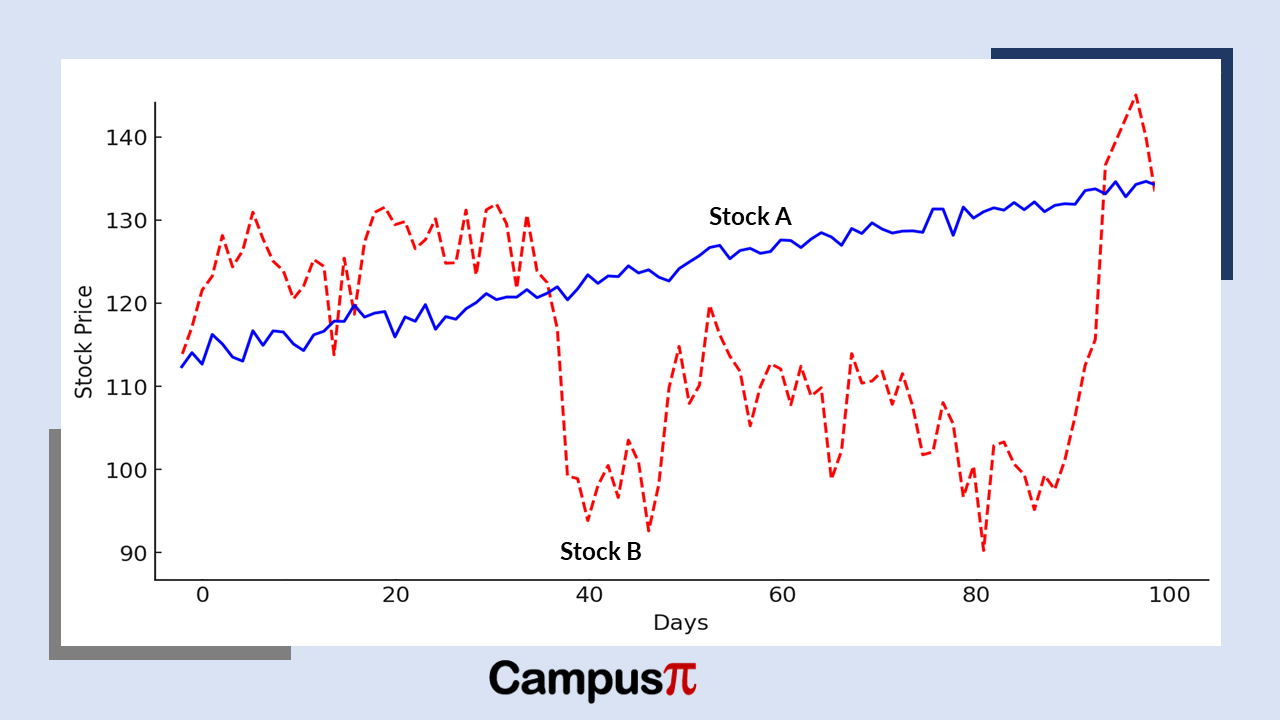

A “fallen angel” is a stock that once performed well but has since declined significantly. Assuming it must recover is dangerous: some companies never regain their past glory because of weak fundamentals, poor management, or irreversible industry changes. Holding on blindly can turn a loss into a bigger one. A recovery is speculation, not a guarantee.

- “Stocks that go up must come down.”

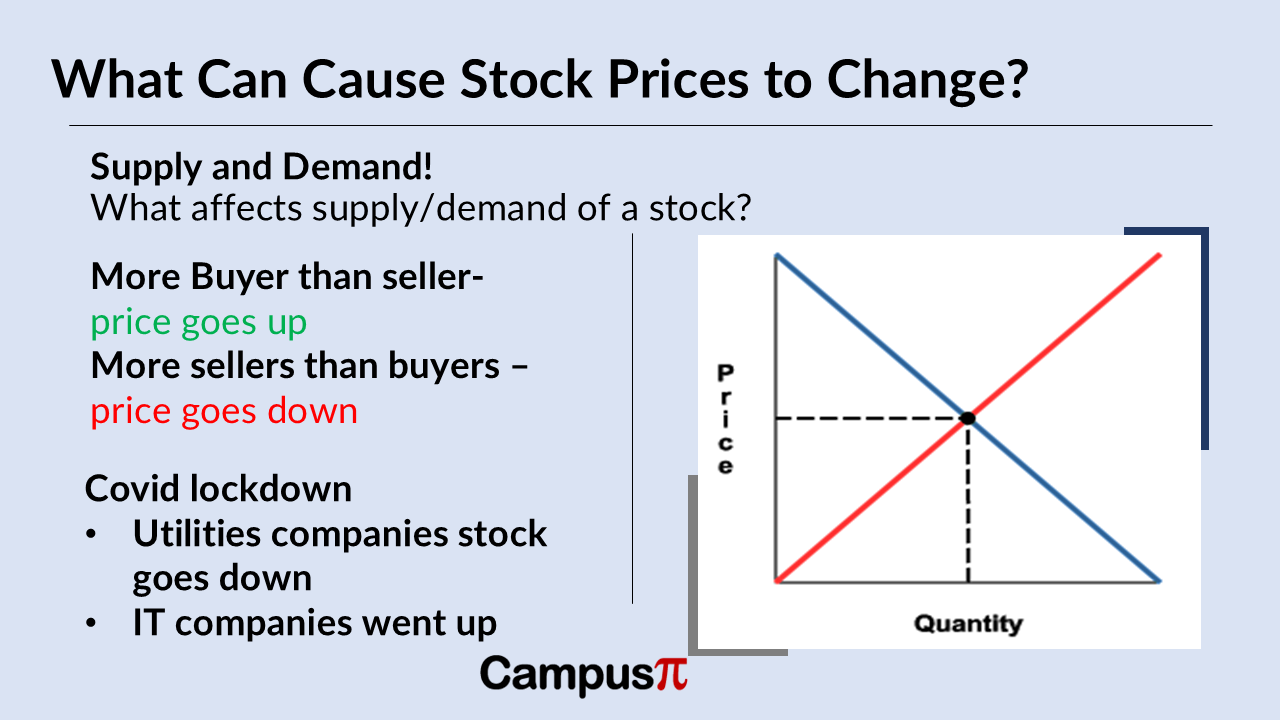

Prices fluctuate, but assuming that every rising stock must crash ignores the long-term growth potential of good companies. Many strong businesses increase in value over time through innovation, expanding markets, and solid earnings. Corrections are natural, yet not every rise is followed by a crash.

The saying “stocks that go up must come down” reflects a belief that any rising stock is bound to fall eventually. No stock climbs forever without pauses or corrections, but the phrase oversimplifies market behavior. Stocks rise for valid reasons, such as strong earnings, innovation, or market dominance. Short-term pullbacks driven by profit-taking or market cycles are common, yet fundamentally strong stocks can trend upward over long periods. Assuming every gain will be followed by a major drop can lead to missed opportunities. It’s better to assess each stock on its merits than to rely on blanket assumptions about price movements.

- “Having just a little knowledge, because it is better than none, is enough to invest in the stock market.”

Believing that a little knowledge is enough to invest in the stock market can be risky. A basic understanding is better than none, but limited knowledge can lead to poor decisions, emotional trading, or falling for hype and speculation. The stock market is complex and influenced by many factors, including earnings, interest rates, and global events. Without a solid foundation, investors may misjudge risks or chase short-term trends. Successful investing requires continuous learning, research, and discipline, so it’s wise to start small and keep growing your knowledge. Informed decisions, not minimal understanding, are what lead to long-term success in the market.

- “The stock market always reflects the economy.”

The belief that the stock market always reflects the economy is a common misconception. The market does react to economic trends, but it primarily reflects investor expectations about future performance rather than current conditions. Stock prices are driven largely by sentiment, corporate earnings forecasts, and monetary policy, and only indirectly by the latest readings of data such as unemployment or GDP. This disconnect is evident during crises, when markets may rise despite an economic downturn because investors anticipate a recovery. Conversely, strong economic indicators may fail to boost markets if expectations were already high. Understanding this gap helps investors make more informed decisions instead of treating market movements as a direct economic signal.

- “Stocks always go up in the long run.”

The idea that stocks always go up in the long run is widely believed, but it’s only partially true. While broad market indices like the S&P 500 have historically trended upward over decades, individual stocks don’t always follow this path. Companies can underperform, stagnate, or even go bankrupt. Long-term growth also depends on factors like diversification, time horizon, and market conditions. Simply holding a single stock for years doesn’t guarantee profit. It’s important to invest wisely, regularly reassess your portfolio, and avoid assuming that time alone will protect you from losses. Long-term success requires strategy, not just patience.